By Ruchi Pardal


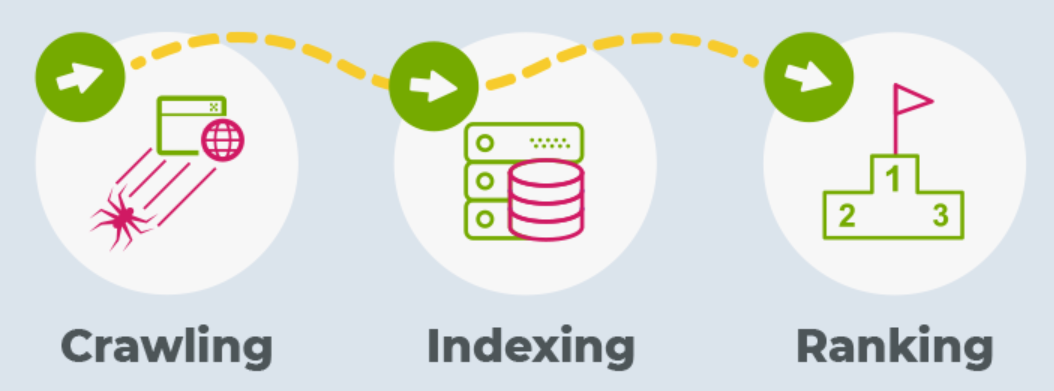

Crawlability is how easily search engines can access and navigate your website's pages so that your content can be discovered, indexed, and shown across search platforms.

The rise of AI search has made crawlability even more important for visibility and ranking, because modern AI search tools favor pages that are quick and simple to crawl.

Before AI search engines can mention, cite, or recommend your site, their crawlers must be able to access and interpret your content. No matter how strong your traditional SEO strategy is, effective AI SEO optimization and better ranking performance depend on a website that crawlers can read easily.

So, let’s try to understand how AI crawlers work, what blocks them from accessing your website, and what steps you can follow to ensure that your site is crawled and understood by AI.

It’s important to understand that AI crawlers are different from the traditional SEO crawlers used by Google or Bing. Answer engines such as AI Overviews and LLM-based tools (ChatGPT, Perplexity AI, Claude) are making search more powerful and simpler for users. They don’t just return links; they synthesize answers, cite sources, and recommend brands.

Note: Many AI systems don’t provide a “re-index request” pathway as Google Search Console does. This means that once you publish content, the initial crawl determines how it is interpreted. If your content, structure, metadata, or links are not optimized at that point, the content may not surface in AI search until the next automated crawl, which can take some time.

So, if you’re wondering how AI bots differ from Google and other traditional bots in terms of crawling, here are some of the main differences that can help you make better crawling fixes:

Various technical issues can restrict or block AI crawlers from accessing, indexing, and understanding your content, directly impacting your AI SEO optimization efforts. Some of the leading factors that block AI crawlers include:

A few small technical improvements, combined with good AI SEO optimization, can help AI bots crawl your website and content more effectively:

Your AI SEO optimization is of little use if you don’t know what is broken. Your aim is visibility, so you need to know how your content is performing and whether any blockers are stopping AI and LLMs from recognizing it. Here is what you can do:

For traditional SEO, Google Search Console tells you whether Google's bot has visited a page. For AI search, the picture is less clear, because the user agents of AI crawlers are relatively new and are often missed by standard analytics. So you have to continuously monitor your traffic and identify crawlers from OpenAI, Perplexity, and other answer engines.
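One way to do this monitoring is to scan your server access logs for AI crawler user agents. The sketch below is a minimal illustration, not a complete solution. It assumes logs in the common "combined" format, and the user-agent substrings in `AI_CRAWLER_TOKENS` are assumptions that should be verified against each vendor's current documentation. The function name and sample log lines are hypothetical.

```python
import re
from collections import Counter

# Assumed user-agent substrings; verify against each vendor's documentation.
AI_CRAWLER_TOKENS = ("GPTBot", "OAI-SearchBot", "PerplexityBot", "ClaudeBot")

# Captures the request path and the last quoted field (the user agent)
# of a combined-format access log line.
LOG_LINE = re.compile(
    r'"(?:GET|HEAD|POST) (?P<path>\S+) [^"]*".*"(?P<agent>[^"]*)"$'
)


def count_ai_crawler_hits(lines):
    """Return a Counter keyed by (crawler token, requested path)."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.search(line.strip())
        if not match:
            continue  # skip lines that are not in the expected format
        agent = match.group("agent").lower()
        for token in AI_CRAWLER_TOKENS:
            if token.lower() in agent:
                hits[(token, match.group("path"))] += 1
                break
    return hits


# Hypothetical sample lines for illustration only.
sample_log = [
    '203.0.113.5 - - [10/Jan/2025:10:00:00 +0000] "GET /blog/ai-seo HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; GPTBot/1.1)"',
    '203.0.113.9 - - [10/Jan/2025:10:05:00 +0000] "GET /pricing HTTP/1.1" 200 2048 "-" "Mozilla/5.0 (compatible; PerplexityBot/1.0)"',
    '198.51.100.7 - - [10/Jan/2025:10:06:00 +0000] "GET /pricing HTTP/1.1" 200 2048 "-" "Mozilla/5.0 (Windows NT 10.0) Chrome/120.0"',
]

hits = count_ai_crawler_hits(sample_log)
for (crawler, path), count in sorted(hits.items()):
    print(crawler, path, count)
```

User-agent strings can be spoofed, so treat these counts as a signal rather than proof of a genuine crawler visit.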

Create a custom segment that alerts you when pages are published without using relevant schema. Add schema to the key pages to allow answer engine bots to properly understand your content.
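A check like this can be approximated with a small script that flags pages whose HTML contains no JSON-LD block. This is a rough sketch under stated assumptions: it only detects `<script type="application/ld+json">` tags, so schema expressed as microdata or RDFa would be missed, and the `pages` mapping of URL to HTML is a hypothetical stand-in for however you fetch or export your pages.

```python
from html.parser import HTMLParser


class JsonLdDetector(HTMLParser):
    """Sets has_json_ld when a JSON-LD script tag is seen."""

    def __init__(self):
        super().__init__()
        self.has_json_ld = False

    def handle_starttag(self, tag, attrs):
        # An attribute without a value is reported as None, hence the "or".
        script_type = (dict(attrs).get("type") or "").lower()
        if tag == "script" and script_type == "application/ld+json":
            self.has_json_ld = True


def pages_missing_schema(pages):
    """Return the URLs whose HTML has no JSON-LD script tag."""
    missing = []
    for url, html in pages.items():
        detector = JsonLdDetector()
        detector.feed(html)
        if not detector.has_json_ld:
            missing.append(url)
    return missing


# Hypothetical pages for illustration only.
pages = {
    "/blog/ai-seo": '<html><head><script type="application/ld+json">'
                    '{"@type": "Article"}</script></head><body></body></html>',
    "/pricing": "<html><head><title>Pricing</title></head><body></body></html>",
}

print(pages_missing_schema(pages))
```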

It allows you to flag pages that LLM crawlers have not visited in days or months. It will also help you identify pages with technical or content-related issues that have very little chance of being cited.

Gaining mentions and citations in AI answers requires proper planning and good AI SEO optimization strategies that increase the chances of your content being crawled and interpreted by AI. Here are some tips to improve:
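Flagging such pages can be sketched as a simple date comparison. The example below is illustrative only: it assumes you already have the date of the last AI crawler visit per page (for instance, from your server logs), and the function name, the 30-day threshold, and the sample data are all hypothetical.

```python
from datetime import datetime, timedelta


def stale_pages(last_crawled, all_pages, now, max_age_days=30):
    """Return pages never crawled or not crawled within max_age_days."""
    cutoff = now - timedelta(days=max_age_days)
    stale = []
    for page in all_pages:
        seen = last_crawled.get(page)
        if seen is None or seen < cutoff:
            stale.append(page)
    return stale


# Hypothetical data for illustration only.
now = datetime(2025, 1, 31)
last_crawled = {
    "/blog/ai-seo": datetime(2025, 1, 25),
    "/pricing": datetime(2024, 11, 1),
}
all_pages = ["/blog/ai-seo", "/pricing", "/about"]

print(stale_pages(last_crawled, all_pages, now))
```

Pages that never appear in the crawl data are treated as stale, since they are the ones most worth investigating.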

The search environment is changing drastically. We are moving away from a time when scheduled crawlers and traditional rankings determined online performance. The moment an AI bot crawls your website, it determines whether your content is discovered, cited, or ignored. AI crawlers decide which content is surfaced on different search platforms.

As search algorithms change rapidly, having a proactive AI SEO optimization strategy can make all the difference. By consistently tracking crawl activity, reducing crawl waste, and adding clear schema and author signals to your content, you are more likely to be seen, understood, and cited by answer engines.

Want to strengthen your visibility in AI Search? Choose ResultFirst, your professional AI SEO agency that focuses on making your content contextually relevant and technically optimized to make it easier for AI bots to crawl. From structured metadata to accurate schema implementation, ResultFirst uses various AI SEO optimization strategies to get your website cited, mentioned, and recommended across various AI platforms.

Crawlability makes it possible for AI crawlers to access, understand, and index your website content effectively. Better crawlability therefore strengthens AI SEO optimization, which in turn brings more visibility on the platforms and engines that rely on AI for search and answers.

AI crawlers work from a page's raw HTML content and structured data and judge it on contextual accuracy, whereas Google's bots can also render JavaScript. AI bots focus on clarity, schema, and fast accessibility.
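To get a rough sense of what a crawler that does not run JavaScript would see, you can check whether a key phrase appears in the raw HTML text outside of `<script>` and `<style>` tags. The sketch below is only an approximation of that idea. The class and function names and the two sample pages are hypothetical, and real crawlers may behave differently.

```python
from html.parser import HTMLParser


class VisibleTextExtractor(HTMLParser):
    """Collects text that sits outside <script> and <style> elements."""

    SKIPPED = {"script", "style"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0 and data.strip():
            self.chunks.append(data.strip())


def phrase_in_raw_html(html, phrase):
    """Return True if phrase appears in the non-script text of html."""
    extractor = VisibleTextExtractor()
    extractor.feed(html)
    extractor.close()
    text = " ".join(extractor.chunks)
    return phrase.lower() in text.lower()


# Hypothetical pages: one server-rendered, one rendered only by JavaScript.
server_rendered = "<html><body><h1>Pricing plans</h1></body></html>"
client_rendered = (
    '<html><body><div id="root"></div>'
    '<script>render("Pricing plans")</script></body></html>'
)

print(phrase_in_raw_html(server_rendered, "Pricing plans"))
print(phrase_in_raw_html(client_rendered, "Pricing plans"))
```

If important content only shows up after JavaScript runs, as in the second sample, a crawler that reads raw HTML may never see it.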

JavaScript overuse, lack of schema, bad site architecture, broken links, and sluggish page speed can block AI crawlers, reduce your visibility, and hurt your AI SEO optimization.

Implement server-side rendering, optimize metadata, repair broken links, ensure quick load times, and add structured schema markup to make your site clearer to AI crawlers.

AI crawlers can visit websites more often than traditional bots; however, no manual re-index option exists. That is why it is important to get technical and content details right at the time of publication.
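As an illustration of structured schema markup, the sketch below builds a schema.org `Article` block in JSON-LD with an author signal. It is a minimal example under stated assumptions: the helper name and all field values are hypothetical placeholders, and the properties you actually need depend on your page type.

```python
import json


def article_json_ld(headline, author_name, date_published, url):
    """Return a JSON-LD <script> tag describing an article."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        "datePublished": date_published,
        "mainEntityOfPage": url,
    }
    body = json.dumps(data, indent=2)
    return '<script type="application/ld+json">\n' + body + "\n</script>"


# Placeholder values for illustration only.
snippet = article_json_ld(
    headline="Example headline",
    author_name="Example Author",
    date_published="2025-01-31",
    url="https://example.com/blog/example-post",
)
print(snippet)
```

The resulting tag would typically be placed in the page's `<head>` and rendered on the server so that it is present in the raw HTML.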